Genomics has been revolutionized by the growing ubiquity of next-generation sequencing (NGS) platforms, whose throughput, speed, and accuracy provide a detailed look at patterns and mechanisms of gene expression and allow a meticulous examination of polymorphisms with profound impacts on health and disease. Accordingly, the market for NGS technologies, growing at a consistent 20 percent per year, is projected to reach $16 billion in revenue by 2024. NGS diverges from the historical arc of other technologies, however, in that a detailed study of the metaphorical trees has preceded an accurate look at the breadth and character of the forest.

Second-generation sequencing began with the commercialization of 454 pyrosequencing by Roche in 2005, and Illumina/Solexa massively parallel sequencing in 2007. Upon these now-maturing frameworks were built vast contemporary consortia to expand upon the knowledge gained in the Human Genome Project, and to exploit attendant increases in genomics capability, accessibility, cost-effectiveness and, therefore, scale. For instance, The Cancer Genome Atlas is a collaboration between the National Cancer Institute and the National Human Genome Research Institute that has used NGS to assemble a 2.5-petabyte (2.5 × 10^15 bytes, or 2.5 million GB) public database and create a comprehensive map of 33 different cancers from more than 20,000 patient samples. Robust funding and wide access to NGS technology and patient volunteers allow the rapid and accurate identification of single-nucleotide polymorphisms in important genes. Such studies can categorize or discover genetic variants and inform appropriate medical decisions, such as selecting between FDA-approved treatment regimens for well-studied variations, or advising entry into clinical trials based on novel genotypes.

The goal of The Cancer Genome Atlas is to propel basic and translational research into effective precision medicine solutions, and in 2018 at least 35 peer-reviewed journal articles were published using its data. Similar or supplementary projects have taken hold in the United States and European Union countries. The National Institutes of Health has established genome centers at Baylor University, the Broad Institute, and the University of Washington with the goal of enlisting up to one million volunteers in NGS-based precision medicine studies, especially for particular risk genes in historically underrepresented populations. The UK National Health Service has developed Genomics England with 13 associated genomic medicine centers, and is using Illumina-based NGS in a proof-of-principle study of the scalability of diagnostic sequencing, with more than 100,000 genomes. France is separately using the Illumina NovaSeq NGS platform to sequence 18,000 full genomes by 2021.

In short, NGS is doing a very good job, and will continue to do so in the near future, of identifying molecular barriers to health across large populations and whole societies. Especially in the study and treatment of cancer, NGS is adept at identifying minute genetic variations, or employing single-cell sequencing to discover medically relevant heterogeneities in cancer cell-immune cell interactions. However, when it comes to whole genomes, NGS simply misses a lot of information. A Johns Hopkins University study sequenced genomes of pan-African origin up to 40x coverage and then analyzed the accuracy of reference genomes, finding that approximately 300 million base pairs did not align to the reference.

In the creation of de novo genome assemblies, investigators focus on three C’s: contiguity, completeness, and correctness. While NGS is often inadequate on these counts, third-generation sequencing (TGS) can improve all three. The third generation is distinct from the next generation in its capability to sequence very long reads. NGS typically sequences nucleic acids in 150-250 base-pair fragments labeled with adapters during library preparation, whereas fundamentally different electrochemical and base-calling technologies allow TGS to read sequences of up to 100 kilobases.

At the single-gene level, these enhanced capabilities enable TGS to: 1) sequence many whole genes and identify novel isoforms, and 2) recognize genetic variants that are obscure to short-read NGS technologies, including long GC-repeat-rich polymorphisms, insertions, and translocations that can have as-yet unrealized predictive power for disease diagnosis and etiology. At the genome level, TGS can: 1) simultaneously detect epigenetic modifications such as methylation by monitoring the time between base reads, and 2) provide improved long-range mapping quality for reference genomes and de novo genome assemblies. Therefore, TGS can give us a better view of the forest.
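
To make two of the quantities above concrete, here is a minimal Python sketch. It is an illustration, not taken from any of the studies cited. It computes N50, a widely used summary of assembly contiguity, and the number of reads needed to reach a given mean coverage at short and long read lengths. The contig lengths are invented, and the 3.1-billion-base genome size is a rough assumption for a human genome.

```python
def n50(contig_lengths):
    """Return the N50 of an assembly: the largest length L such that
    contigs of length >= L together hold at least half of all bases."""
    lengths = sorted(contig_lengths, reverse=True)
    if not lengths:
        raise ValueError("contig_lengths must not be empty")
    half_total = sum(lengths) / 2
    running = 0
    for length in lengths:
        running += length
        if running >= half_total:
            return length


def reads_needed(coverage, genome_size, read_length):
    """Reads required for a mean depth of `coverage`, using
    coverage = reads * read_length / genome_size (rounded up)."""
    total_bases = coverage * genome_size
    return (total_bases + read_length - 1) // read_length  # integer ceiling


# Hypothetical assemblies of the same 1,000,000-base genome.
fragmented_assembly = [50_000] * 20               # many short contigs
contiguous_assembly = [600_000, 300_000, 100_000]  # a few long contigs

print("N50, fragmented:", n50(fragmented_assembly))
print("N50, contiguous:", n50(contiguous_assembly))

# Assumed genome size of roughly 3.1 billion bases (illustrative only).
GENOME_SIZE = 3_100_000_000
print("150-base reads for 40x:", reads_needed(40, GENOME_SIZE, 150))
print("100-kilobase reads for 40x:", reads_needed(40, GENOME_SIZE, 100_000))
```

Both toy assemblies contain the same number of bases, yet the one built from a few long contigs has a far higher N50. Long reads tend to improve contiguity for this reason: each read spans more of the genome, so fewer pieces need to be joined.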

Pacific Biosciences single-molecule real-time (SMRT) sequencing monitors fluorescently tagged nucleotides as they are incorporated into template molecules, and among TGS platforms has the highest throughput and read length, but also the highest error rate. The real-time (“Read Until”) function inherent in TGS instrumentation provides support for target enrichment studies, in which an irrelevant sequence can be discarded as it is read, streamlining data collection and analysis. Independent investigators are augmenting “Read Until” capabilities through the use of algorithms with the potential to significantly improve target enrichment and read accuracies. Illumina TruSeq uses a synthetic read process, clonally amplifying and barcoding DNA fragments and assembling longer reads from short ones; throughput and read length both decrease while accuracy increases. Oxford Nanopore Technologies’ MinION measures disruptions in electric current as individual DNA molecules pass through a nanopore. Among the three platforms, MinION currently has the lowest throughput and accuracy, limiting much of its use to assembly and characterization of microbial genomes, especially in target enrichment studies. However, its portability gives it field-level capabilities and therefore applications unavailable to other TGS platforms. MinION was intimately involved in characterization and management of the most recent West African Ebola outbreak, and has vast potential in the characterization of emerging zoonotic viruses such as SARS-CoV-2 (responsible for the COVID-19 pandemic), and just as importantly, whatever the next one may be.
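
The “Read Until” idea described above, discarding an irrelevant molecule while it is still being read, can be illustrated with a toy decision rule. The Python sketch below is not any vendor's API. The function names, the k-mer matching rule, and the threshold are all assumptions made for illustration, and real implementations work on streamed signal or base-called chunks with proper aligners.

```python
def kmers(sequence, k):
    """Return the set of all length-k substrings of `sequence`."""
    return {sequence[i:i + k] for i in range(len(sequence) - k + 1)}


def should_keep(read_prefix, target_kmers, k, min_fraction=0.5):
    """Decide from the first bases of a read whether to keep sequencing.

    Hypothetical rule: keep the molecule if at least `min_fraction` of
    the prefix's k-mers also occur in the target region.
    """
    prefix_kmers = kmers(read_prefix, k)
    if not prefix_kmers:
        return True  # fewer than k bases so far: too early to eject
    shared = len(prefix_kmers & target_kmers)
    return shared / len(prefix_kmers) >= min_fraction


# Invented target region and read prefixes, for illustration only.
target = "ACGTTGCATGCCGATAGCTAGGCTAACGT"
K = 8
target_index = kmers(target, K)

prefixes = {
    "on-target read": target[5:21],             # drawn from the target
    "off-target read": "TTTTTTTTAAAAAAAACCCC",  # unrelated sequence
}

for name, prefix in prefixes.items():
    keep = should_keep(prefix, target_index, K)
    print(name, "->", "keep sequencing" if keep else "eject and move on")
```

The decision uses only the start of each read, so time spent on off-target molecules is cut short. This matters most when, as with 100-kilobase reads, a single molecule would otherwise occupy the sequencer for a long time.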

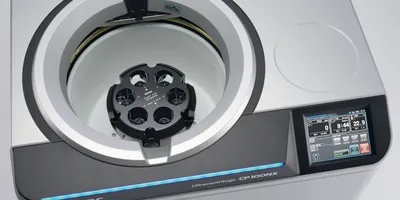

Future developments in TGS will have to include improving its accuracy and throughput. Moreover, improving its compatibility with magnetic bead-based automated library preparation steps will be a necessary component of increasing accuracy and reproducibility, by getting as many hands out of the master mix as possible. Finally, the best approach to genomic assembly and characterization may be a hybrid approach combining the base-calling accuracy and high throughput of NGS with the coverage via long reads of TGS to optimize both while minimizing the limitations of each.