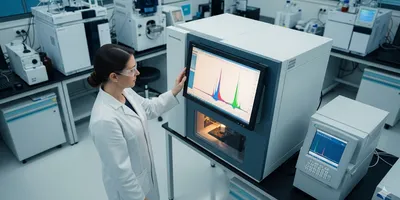

System suitability testing (SST) plays an indispensable role in the modern analytical laboratory. The journey from sample to result is a meticulous process governed by strict protocols and validated methods. Yet, even the most robust and well-defined method is only as good as the system that executes it. An instrument that has been calibrated and maintained can still experience subtle shifts in performance due to a variety of factors: a failing column, a minor temperature fluctuation, or a degradation of the mobile phase. These seemingly small issues can quietly compromise the integrity of an entire analytical run.

Before a single unknown sample is injected, an analytical chemist must confirm that the entire system—the instrument, the column, the reagents, and the software—is operating within the pre-established performance limits. Think of SST not as a redundant check, but as the final, critical gatekeeper of data quality. It is a proactive quality assurance measure that verifies the fitness-for-purpose of the entire analytical system on a day-to-day basis. This article will provide a comprehensive guide to the principles, parameters, and practical application of SST, empowering laboratory professionals to elevate the quality and reliability of their analytical work.

What is System Suitability Testing? The Pre-Analytical Check

At its core, system suitability testing is a formal, prescribed test of an analytical system’s performance. It is run before each analytical batch of samples and is not to be confused with method validation. While method validation, which includes concepts like robustness testing, demonstrates that a method is reliable in principle, SST demonstrates that the specific instrument, on a specific day, is capable of generating high-quality data according to the validated method’s requirements.

The test is performed by injecting a reference standard or a mixture of standards into the system. The response of the system to this test standard is then measured against a set of predefined acceptance criteria. These criteria are derived from the original method validation and are designed to ensure the system is operating within a range that will produce accurate and precise results for the samples to be analyzed. If the system passes the test, the analytical run can proceed. If it fails, the run is halted, and the source of the failure must be identified and corrected before any further work is done.

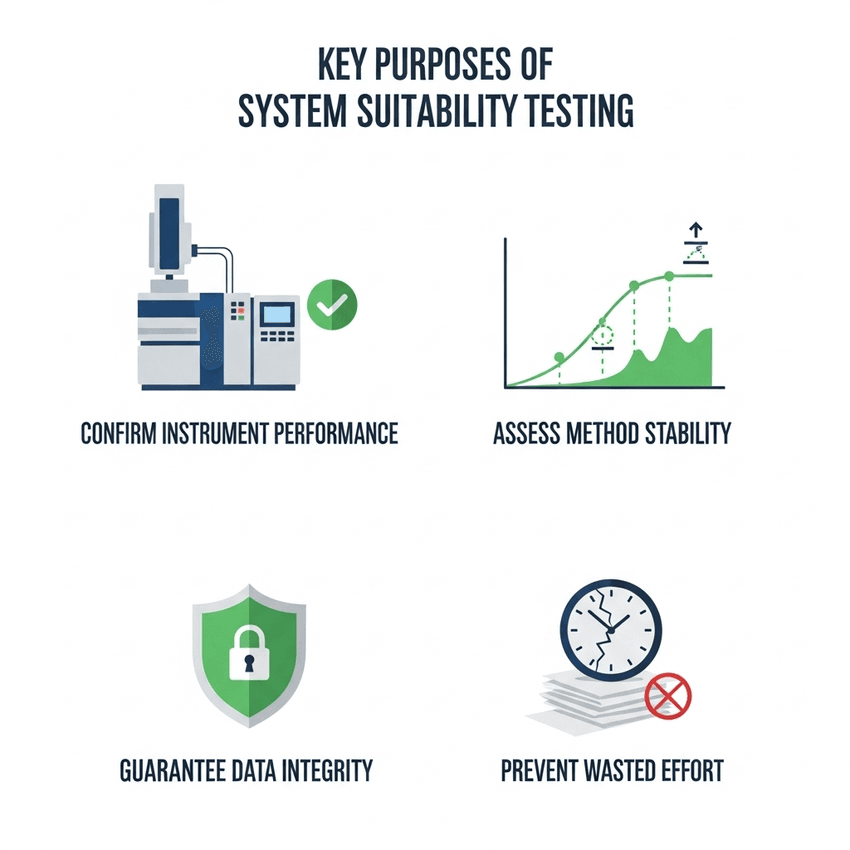

The key purpose of system suitability testing is to:

System suitability testing has several key purposes.

GEMINI (2025)

- Confirm Instrument Performance: Verify that the instrument (e.g., HPLC, GC) is functioning correctly in real-time.

- Assess Method Stability: Check for any degradation in reagents, mobile phase, or the analytical column.

- Guarantee Data Integrity: Provide documented evidence that all analytical results were generated under conditions that met the required performance standards.

- Prevent Wasted Effort: Avoid the costly and time-consuming process of analyzing samples only to find out later that the system was malfunctioning.

By making system suitability testing a standard part of your workflow, you create a documented chain of evidence that every result was produced on a system verified to be fit for purpose.

Critical Parameters for System Suitability Testing

The parameters evaluated during system suitability testing are carefully chosen to reflect the most critical aspects of the chromatographic separation. These metrics quantify the quality of the separation, the efficiency of the column, and the reproducibility of the instrument. While the specific parameters may vary depending on the method, the most common ones include:

1. Resolution (Rs): Resolution is a measure of how well two adjacent peaks are separated. It is a critical parameter, especially in complex matrices where compounds of interest elute close to impurities or other components. The acceptance criteria for resolution are typically set during method validation, and SST confirms that the separation still meets that standard.

2. Tailing Factor (T) or Asymmetry Factor (As): This parameter measures the symmetry of a peak. An ideal, perfectly symmetrical peak has a tailing factor of 1.0. A factor greater than 1.0 indicates peak tailing, which can be caused by column degradation or interactions between the analyte and the column. Poor peak shape can lead to inaccurate integration and quantification.

3. Plate Count (N): Also known as column efficiency, the plate count is a measure of the number of theoretical plates in a column. A higher plate count indicates a more efficient column, with narrower peaks and generally a better separation. Over time, the efficiency of a column can decrease. SST confirms that the column is still performing above a minimum required plate count.

4. Relative Standard Deviation (RSD) or %RSD: This is a measure of the reproducibility of the instrument. It is calculated from multiple injections of the same standard. A low %RSD indicates that the instrument is providing consistent, reproducible results, which is essential for accurate quantification. A typical acceptance criterion for %RSD is no more than 1.0% or 2.0% for replicate injections of a standard solution, depending on the method.

5. Signal-to-Noise Ratio (S/N): This parameter assesses the detector’s performance. It is the ratio of the peak height of the analyte to the height of the background noise. A minimum S/N ratio is typically set to ensure the method is sensitive enough for the application, especially for trace-level analysis.
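As a rough illustration of the three peak-based parameters above, the sketch below uses formulas that are widely used in pharmacopoeial-style chromatography calculations: resolution from baseline (tangent) peak widths, tailing factor from the peak width at 5% of peak height, and plate count from the width at half height. The function names and the example numbers are hypothetical, and the validated method, not this sketch, decides which formula variant applies.

```python
def resolution(t_r1, t_r2, w1, w2):
    """Rs = 2 * (tR2 - tR1) / (W1 + W2).

    t_r1, t_r2: retention times of two adjacent peaks (t_r2 > t_r1).
    w1, w2: baseline (tangent) peak widths, in the same time units.
    """
    return 2.0 * (t_r2 - t_r1) / (w1 + w2)


def tailing_factor(w_005, f):
    """T = W0.05 / (2 * f).

    w_005: full peak width at 5% of peak height.
    f: distance from the leading edge to the peak maximum at 5% height.
    A perfectly symmetrical peak gives T = 1.0.
    """
    return w_005 / (2.0 * f)


def plate_count(t_r, w_half):
    """N = 5.54 * (tR / W_half)^2, using the peak width at half height."""
    return 5.54 * (t_r / w_half) ** 2


if __name__ == "__main__":
    # Hypothetical peak measurements in minutes, for illustration only.
    print("Rs:", resolution(4.0, 5.0, 0.4, 0.6))
    print("T:", tailing_factor(0.30, 0.12))
    print("N:", plate_count(5.0, 0.10))
```

With these made-up inputs the peaks are well resolved (Rs of 2.0), the second peak tails slightly (T of about 1.25), and the plate count comes out near 13,850. In practice the chromatography software reports these values directly; a sketch like this is mainly useful for sanity-checking a report.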

By monitoring these key parameters during system suitability testing, laboratories can quickly identify and address potential problems before they affect sample analysis.
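The reproducibility and sensitivity parameters are simple to compute by hand. The following is a minimal sketch, assuming peak areas from replicate injections are already available as a list. The function names, the `factor` argument, and the example areas are illustrative assumptions rather than part of any particular data system.

```python
from statistics import mean, stdev


def percent_rsd(values):
    """%RSD = 100 * sample standard deviation / mean.

    Needs at least two replicate values; uses the n-1 (sample) form.
    """
    return 100.0 * stdev(values) / mean(values)


def signal_to_noise(peak_height, noise_height, factor=1.0):
    """S/N as peak height divided by noise height, as described in the text.

    Some pharmacopoeial conventions define S/N as 2H/h, with h the
    peak-to-peak noise; pass factor=2.0 to follow that convention.
    """
    return factor * peak_height / noise_height


if __name__ == "__main__":
    # Hypothetical peak areas from six replicate injections of one standard.
    areas = [1002.1, 998.7, 1001.3, 999.5, 1000.9, 997.5]
    print("%RSD:", round(percent_rsd(areas), 2))
    print("S/N:", signal_to_noise(peak_height=50.0, noise_height=5.0))
```

Which S/N convention applies should be taken from the method or the governing pharmacopoeia, since the two differ by a factor of two.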

Practical Implementation: From Test to Action

A successful system suitability testing protocol is not just about running a test; it’s about having a clear plan for interpreting the results and acting on them.

Develop the SST Protocol: During method validation, define the specific parameters to be tested, their acceptance criteria, and the frequency of the test (e.g., at the beginning of each run, every 24 hours, or after a specific number of injections).

Prepare the SST Solution: Use a reference standard or a certified reference material. The concentration of this standard should be representative of a typical sample concentration and should be prepared accurately and precisely.

Perform the Test: Inject the SST solution and allow the system to run according to the method. It is common to perform 5-6 replicate injections to assess reproducibility.

Evaluate the Results: The software should automatically calculate the SST parameters and compare them against the pre-defined acceptance criteria. The results are typically displayed in a report or a dashboard.

Act on the Outcome:

- If the system passes: Proceed with the analysis of the unknown samples.

- If the system fails: Immediately halt the run. Do not proceed with sample analysis. A systematic troubleshooting process must be initiated to identify the root cause of the failure. This could involve checking for air bubbles, regenerating or replacing the column, preparing fresh mobile phase, or performing instrument maintenance. Once the issue is resolved, the SST must be re-run and passed before the analysis can continue.
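The evaluate-and-act steps above can be sketched as a small pass/fail gate. This is only an illustration: the `Criterion` class, the parameter names, and every limit shown are hypothetical placeholders, and real acceptance criteria come from the validated method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    limit: float
    kind: str  # "min": value must be >= limit; "max": value must be <= limit

    def __post_init__(self):
        if self.kind not in ("min", "max"):
            raise ValueError(f"unknown criterion kind: {self.kind!r}")

    def passes(self, value):
        return value >= self.limit if self.kind == "min" else value <= self.limit


# Hypothetical limits for illustration; take real ones from the method.
CRITERIA = [
    Criterion("resolution", 2.0, "min"),
    Criterion("tailing_factor", 2.0, "max"),
    Criterion("plate_count", 2000, "min"),
    Criterion("percent_rsd", 2.0, "max"),
    Criterion("signal_to_noise", 10.0, "min"),
]


def evaluate_sst(measured, criteria=CRITERIA):
    """Return a list of failure messages; an empty list means the SST passed.

    A parameter missing from `measured` is treated as a failure.
    """
    failures = []
    for c in criteria:
        value = measured.get(c.name)
        if value is None:
            failures.append(f"{c.name}: not reported")
        elif not c.passes(value):
            op = ">=" if c.kind == "min" else "<="
            failures.append(f"{c.name}: {value} does not satisfy {op} {c.limit}")
    return failures


def may_proceed(measured, criteria=CRITERIA):
    """True only if every criterion is met; otherwise the run is halted."""
    return not evaluate_sst(measured, criteria)


if __name__ == "__main__":
    results = {"resolution": 2.4, "tailing_factor": 1.3, "plate_count": 5200,
               "percent_rsd": 2.6, "signal_to_noise": 35.0}
    for message in evaluate_sst(results):
        print("FAIL", message)
    print("Proceed with samples:", may_proceed(results))
```

In this made-up example only the %RSD limit is exceeded, so the gate blocks the run until the cause is corrected and the SST is repeated. Treating a missing parameter as a failure is a deliberately conservative choice for the sketch.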

Adhering to this process ensures that every result is generated on a system that is demonstrably suitable for the task. It transforms data reporting from a simple output to a statement of qualified confidence.

Beyond Compliance: The Return on Investment

For laboratory managers and professionals, the true value of system suitability testing extends far beyond mere regulatory compliance. While regulatory agencies such as the FDA and guidelines such as those from the ICH call for some form of SST in regulated analyses, its practical benefits also deliver a strong return on investment.

A failed SST is not a setback; it prevents a much larger problem. It avoids the need to re-run an entire batch of samples, saving valuable time, reagents, and labor. By catching a system issue early, it protects the integrity of the data and avoids costly, time-consuming investigations into out-of-specification results.

Furthermore, a well-documented system suitability testing program builds a solid foundation of confidence in your data. In an era where data transparency and quality are paramount, the ability to demonstrate that every data point was generated on a system that met its acceptance criteria is a competitive advantage and a mark of analytical excellence. It is the final, crucial step in the analytical chain that connects a theoretically sound method with a reliable result.

FAQ: System Suitability Testing

What is the primary purpose of system suitability testing?

The primary purpose of system suitability testing is to verify that an entire analytical system, including the instrument, column, and reagents, is performing according to a validated method's requirements immediately before a batch of samples is analyzed.

What are the key parameters to monitor during system suitability testing?

Key parameters typically include resolution (Rs), tailing factor (T), plate count (N), the relative standard deviation (%RSD) for replicate injections, and, for trace-level work, the signal-to-noise ratio (S/N). These metrics assess the quality of separation, column efficiency, instrument reproducibility, and detector sensitivity.

When should system suitability testing be performed?

System suitability testing should be performed at the beginning of every analytical run. For long-running batches, it may also be performed periodically throughout the run to ensure continued system performance.
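One way to picture periodic checks in a long batch is as an injection sequence with SST injections interleaved among the samples. The sketch below is a hypothetical illustration: the function name, the default replicate count, and the check interval are assumptions, and adding a closing SST injection at the end of the run is a design choice of this example rather than something the text requires.

```python
def build_sequence(samples, sst_replicates=5, check_every=10):
    """Return an injection list for a batch.

    The run starts with `sst_replicates` SST injections, then a single
    SST check is inserted after every `check_every` samples and after
    the last sample (the closing check is an assumption of this sketch).
    """
    sequence = ["SST"] * sst_replicates
    for i, sample in enumerate(samples, start=1):
        sequence.append(sample)
        if i % check_every == 0 or i == len(samples):
            sequence.append("SST")
    return sequence


if __name__ == "__main__":
    # Hypothetical batch of 12 samples with a check every 5 samples.
    batch = [f"S{n}" for n in range(1, 13)]
    for position, name in enumerate(build_sequence(batch, check_every=5), start=1):
        print(position, name)
```

The actual interval and number of replicates should follow the frequency defined in the SST protocol for the method.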

What should be done if the system fails a suitability test?

If a system fails system suitability testing, the analytical run must be stopped immediately. The root cause of the failure must be investigated and corrected. Only after the system passes a re-run of the suitability test can the analysis of unknown samples proceed.