Surface Plasmon Resonance (SPR) has become an essential tool in drug discovery for characterizing biomolecular interactions with speed and precision. In this Q&A, Anthony (Tony) Giannetti, PhD, associate director, applications at Carterra, discusses high-throughput SPR (HT-SPR) and its newfound prominence in enabling more therapeutics to enter the clinic.

Can you provide a brief explanation of Surface Plasmon Resonance (SPR) and how it works?

In drug discovery, both traditional low-throughput SPR platforms and the newer HT-SPR platforms monitor biomolecular interactions in real time. The primary benefit of HT-SPR is that, compared with other label-free platforms, it delivers 100 times the data in 10 percent of the time while using only one percent of the sample. Most molecular-binding assays focus on the endpoint of a binding reaction, where the assay has reached thermodynamic equilibrium. But there’s more. The equilibrium state is the end of the story; it’s what will eventually happen when these two molecules interact. What we also want to know is the how of the story. What pathway did these molecules take to associate with each other, and to come apart again? This is the domain of binding kinetics. How quickly will they run toward each other, and how long will they stay together once they interact?


SPR biosensors are made from a very thin layer of gold deposited on a piece of glass. A microfluidic flow cell is docked on top of the gold, and various solutions are passed through it over the surface. The gold is coated with a hydrogel, a flexible polymer to which one binding partner, the ligand, is attached to keep it near the surface. This is called ligand deposition, and the hydrogel allows the ligand to move around in solution much as it would in its natural environment. The second binding partner, called the analyte, is then introduced and flows over the ligand, giving the two time to associate and form complexes. After some time, the flow is rapidly switched to a solution with no analyte, which washes away any analyte that dissociates from the ligand.
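The association and wash-out phases described above are often interpreted with the simplest kinetic model, 1:1 Langmuir binding, in which the response R changes as dR/dt = ka·C·(Rmax − R) − kd·R. The Python sketch below is an illustration under that assumption, not Carterra’s analysis software: it generates the analytic sensorgram for hypothetical rate constants and shows how “how quickly they run toward each other” (ka) and “how long they stay together” (kd) translate into affinity (KD = kd/ka) and complex half-life. The function name `simulate_1to1`, the parameter values, and the use of response units (RU) are assumptions for illustration; real data often deviate from 1:1 behavior.

```python
import math

def simulate_1to1(ka, kd, conc, rmax, t_assoc, t_dissoc, dt=1.0):
    """Hypothetical helper: analytic 1:1 Langmuir sensorgram (assumed model).

    ka: association rate constant, 1/(M*s)
    kd: dissociation rate constant, 1/s
    conc: analyte concentration during the injection, M
    rmax: response if every ligand site were occupied, RU
    t_assoc, t_dissoc: length of each phase, s
    Returns (times, responses) covering both phases.
    """
    kobs = ka * conc + kd              # observed rate during association
    req = ka * conc * rmax / kobs      # plateau the association phase approaches
    times, resp = [], []
    # Association: analyte flows over the immobilized ligand.
    for i in range(int(round(t_assoc / dt)) + 1):
        t = i * dt
        times.append(t)
        resp.append(req * (1.0 - math.exp(-kobs * t)))
    r0 = resp[-1]                      # response when flow switches to buffer
    # Dissociation: analyte-free buffer washes away analyte as complexes fall apart.
    for i in range(1, int(round(t_dissoc / dt)) + 1):
        t = i * dt
        times.append(t_assoc + t)
        resp.append(r0 * math.exp(-kd * t))
    return times, resp


ka, kd = 1e5, 1e-3                     # hypothetical example rate constants
times, resp = simulate_1to1(ka, kd, conc=10e-9, rmax=100.0,
                            t_assoc=300, t_dissoc=600)
KD = kd / ka                           # equilibrium dissociation constant, M
half_life = math.log(2) / kd           # complex half-life, s
print(f"KD = {KD:.1e} M, complex half-life = {half_life:.0f} s")
# prints: KD = 1.0e-08 M, complex half-life = 693 s
```

Two analytes with the same KD can have very different ka and kd, which is exactly the information an endpoint (equilibrium) assay cannot separate.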

During this process, the gold is illuminated from the glass side by a laser under total internal reflection conditions. The angle at which the light couples into the gold instead of being reflected is extremely sensitive to changes in the refractive index at the fluid/ligand interface because of the phenomenon of surface plasmons: collective oscillations of electrons in the gold, excited by the laser, that create an electric field extending a short distance into the solution. The important thing to understand, though, is that accumulation of analyte on the surface, even just a little, shifts this angle, which allows precise quantitation of how much analyte is concentrating near the surface and, similarly, how quickly it is depleted during washout. From this, numerous quantitative and qualitative observations about the binding reaction can be made.
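To make the angle-sensitivity argument concrete, here is a rough Python estimate of the resonance angle from the textbook matching condition for a plasmon at a single gold/sample interface, with light coupled in through glass (the Kretschmann geometry). The glass index, the gold permittivity near 633 nm, and the sample indices are approximate assumptions, and the model ignores gold-film thickness, the hydrogel, and real instrument optics, so treat the output as an order-of-magnitude illustration only. It shows the resonance angle lying beyond the critical angle for total internal reflection and shifting measurably when the refractive index changes by just 0.001.

```python
import cmath
import math

def spr_angle_deg(n_prism, eps_metal, n_sample):
    """Resonance angle (degrees) from the idealized plasmon matching condition.

    Assumes a semi-infinite metal/dielectric interface: light couples to the
    plasmon when n_prism * sin(theta) = Re(sqrt(eps_m * eps_d / (eps_m + eps_d))).
    Ignores gold-film thickness, the hydrogel layer, and instrument optics.
    """
    eps_d = n_sample ** 2                  # dielectric permittivity of the sample
    n_eff = cmath.sqrt(eps_metal * eps_d / (eps_metal + eps_d)).real
    return math.degrees(math.asin(n_eff / n_prism))


# Assumed values: BK7-like glass, gold near 633 nm, water-like buffer.
N_PRISM = 1.515
EPS_GOLD = complex(-11.6, 1.2)
buffer_angle = spr_angle_deg(N_PRISM, EPS_GOLD, 1.333)
bound_angle = spr_angle_deg(N_PRISM, EPS_GOLD, 1.334)  # slightly denser surface layer
critical_angle = math.degrees(math.asin(1.333 / N_PRISM))
print(f"critical angle  {critical_angle:.2f} deg")
print(f"resonance angle {buffer_angle:.2f} deg")
print(f"shift for +0.001 RIU: {1000 * (bound_angle - buffer_angle):.1f} millidegrees")
```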

Why has high-throughput SPR become increasingly important for laboratories?

Historically, biochemistry and biophysics have focused on characterizing one or two proteins at a time in great detail. After all, that’s how we came to understand metabolic pathways. What’s happening now is that new discoveries can be, and are being, contextualized in the broader biological milieu, where all those processes happen simultaneously to support life. That broader understanding is key to ensuring that new therapeutics and therapeutic approaches modulate disease states with minimal impact on healthy ones. Advances in all areas of biological research, including NGS, CRISPR, and high-throughput screening, cloning, protein expression, crystallography, and cryo-EM, are all contributing to the bigger picture. HT-SPR data joins these and other approaches in studying how myriad interactions involving proteins, nucleic acids, carbohydrates, post-translational modifications, and small molecules contribute to the regulation of cell biology. It’s my hope that together these technologies will soon help us understand pathway biology as well as we have come to understand metabolic regulation.

How does SPR complement other analytical techniques used for molecular interactions?

All researchers need reliable, decision-driving data every step of the way. Every experiment you do tells you something, and every technique you use tells you something in a different way. The trick is to find the minimal amount of experimentation that lets you make the best decision on next steps without falling into cycles of analysis paralysis. Biophysical techniques provide rich information about molecular behavior, and combining them helps rapidly paint a more complete picture. I’ve found that combinations of SPR with NMR, ITC, X-ray crystallography, and analytical ultracentrifugation have been extremely informative for understanding molecular properties and reagent quality, as well as for dissecting unexplained behaviors. Many biophysical methods have undergone rapid development in throughput and reduction to practice over the last 20 years, and each time those advances resulted in wider adoption, use, and contribution to academic and industrial research. I have personally seen many times how combining information from these disparate approaches yielded far more understanding than any of the data sets provided individually, and I hope progress will continue until all these techniques are employed as widely and routinely as ELISAs and Western blots.

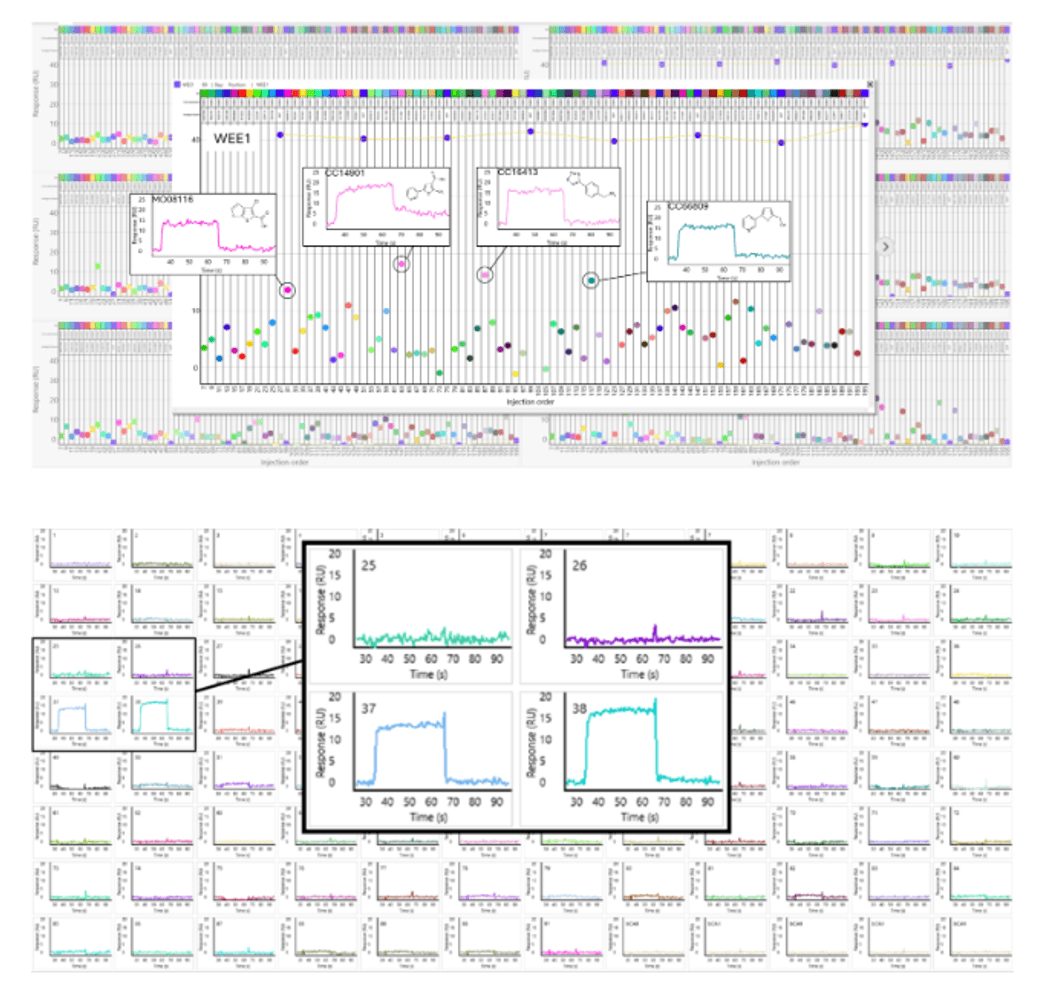

Fragment hit identification against 96 proteins using high-throughput SPR technology.

Credit: Carterra

How has SPR instrumentation evolved over the years to become more accessible to labs of different sizes and budgets?

SPR is a particularly flexible and powerful technique suited to many kinds of biomolecular interaction analysis, which makes such instrumentation valuable at any price point. The most expensive part of any research endeavor is time, so the money you save by buying lower-end instrumentation keeps costing you throughout the lifetime of the instrument. It forces you to piecemeal your way through your samples rather than get the full picture in one go. HT-SPR’s high-resolution data provides a more complete picture up front while reducing the cost per data point, because each analyte’s binding is monitored against hundreds of ligand proteins instead of just a few. Spending a bit more up front to get the data now, instead of weeks or months from now, ultimately yields substantial savings across your research and development processes. That’s valuable whether you’re spending startup investment capital or trying to get sufficient results to renew your grant. When I led an SPR-based small-molecule discovery team, we were always looking for ways to turn around larger sample sets faster for chemists so they could complete more design-data-redesign iterations each year. It’s not just about the cost of the instrumentation; HT-SPR saves you the most by making better use of upstream and downstream resources, keeping your processes moving, and getting you to your papers or patents sooner.

How will new AI tools impact the use of SPR in labs?

AI models need lots of data to train, and HT-SPR generates a lot of data…and I mean A LOT. A single injection can generate 100 to 400 association and dissociation phase observations, one for each ligand on the array, and each of those can be characterized by numerous quantitative and qualitative parameters. Various automated tools already provide data fitting and quality-control assessment, but in time I expect AI-based tools to emerge that perform even better. Once these results are uploaded to databases, I believe AI will prove excellent at finding nuances in the SPR data and correlations with other data, providing a more comprehensive understanding of the interactions. Correlation, of course, is not causation, and AIs tend to generate a lot of hypotheses that need testing. Again, HT-SPR can close the loop with its ability to test many hypotheses in parallel. Shortening cycle times between prediction, experiment, and retraining is key to gaining the full value of what AI has to offer.
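As a minimal sketch of the kind of rule-based fitting and quality control that existing automated tools perform (not any specific product’s algorithm), the Python below fits a single-exponential 1:1 dissociation model to every spot of a hypothetical 96-spot array and flags spots with too little signal or a poor fit. The helper names `fit_dissociation` and `qc_spots`, the thresholds (`min_signal`, `min_r2`), the 0.1 RU noise level, and the simulated curves are all assumptions made for illustration.

```python
import math
import random

def fit_dissociation(times, resp):
    """Fit ln(R) = ln(R0) - kd*t by least squares (assumed 1:1 dissociation).

    Returns (kd, r_squared). Non-positive points are skipped because their
    logarithm is undefined.
    """
    pts = [(t, math.log(r)) for t, r in zip(times, resp) if r > 0]
    if len(pts) < 3:
        return float("nan"), 0.0
    n = len(pts)
    mx = sum(t for t, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((t - mx) ** 2 for t, _ in pts)
    sxy = sum((t - mx) * (y - my) for t, y in pts)
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((y - (intercept + slope * t)) ** 2 for t, y in pts)
    ss_tot = sum((y - my) ** 2 for _, y in pts)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else 0.0
    return -slope, r2


def qc_spots(curves, min_signal=5.0, min_r2=0.98):
    """Flag spots whose signal is too small or whose fit is poor (hypothetical rules)."""
    results = {}
    for spot, (times, resp) in curves.items():
        kd, r2 = fit_dissociation(times, resp)
        flagged = max(resp) < min_signal or r2 < min_r2
        results[spot] = (kd, r2, flagged)
    return results


# Hypothetical 96-spot array: simulated dissociation curves with 0.1 RU noise.
rng = random.Random(0)
times = [10.0 * i for i in range(61)]          # 0 to 600 s
true_kd, curves = {}, {}
for spot in range(96):
    if spot == 42:                             # hypothetical blank spot, no binding
        curves[spot] = (times, [rng.gauss(0.5, 0.3) for _ in times])
        continue
    kd = 5e-4 * 10 ** (spot / 95)              # spans 5e-4 to 5e-3 1/s
    true_kd[spot] = kd
    curves[spot] = (times, [50.0 * math.exp(-kd * t) + rng.gauss(0, 0.1) for t in times])

results = qc_spots(curves)
flagged = [s for s, (_, _, f) in results.items() if f]
print("flagged spots:", flagged)               # expect only the blank spot, 42
```

The log-linear fit is used here only because it needs no optimization library; it over-weights noisy late points, which is one reason global nonlinear fitting of both phases is generally preferred in practice.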

For example, AIntibody.org has issued a series of AI challenge studies around using NGS databases to understand and improve antibody binding. Participants in the most recent challenges submitted ~600 new antibody sequences, and HT-SPR was chosen to characterize all of them experimentally because of the rich binding-quality and kinetic data it provides compared with lower-resolution tools like ELISA.

Are there any emerging trends or novel applications that could further expand the use of SPR in the coming years?

SPR itself is a fairly mature gold-standard technology that has demonstrated many ways to provide value in academic and industrial research. What we see now is the opportunity for high-throughput SPR on large ligand arrays to multiply that value proposition 100-fold or more by generating the desired data in parallel at a scale not previously achieved. The benefits of traditional SPR, such as extremely low sample usage, are amplified by these arrays.

Almost all biological processes involve interactions and communication among many molecules, such as antibodies binding to the full family of Fc receptors, the extensive combinations of peptide/MHC/T-cell receptor interactions required for effective CAR-T therapy, and on- and off-target interactions with the ~500 protein kinases or the ~600 E3 ligases in the human proteome. The ability of HT-SPR to survey an analyte’s interactions with hundreds of different binding partners simultaneously will speed researchers’ efforts to develop a more comprehensive understanding of systems with many inputs and outputs. This will help drive biological insights, screen drug candidates more quickly, and reveal how drug candidates interact with patients’ biology, so therapeutics can be safer and more effective, with less attrition during clinical trials.
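For the kinase example above, a selectivity survey largely reduces to ranking one analyte’s fitted rate constants across the array. The Python sketch below assumes per-spot ka and kd values are already available (for instance from a 1:1 fit) and reports KD, residence time (1/kd), and fold-selectivity relative to the intended target. The function name `selectivity_profile`, the panel names, and the rate constants are hypothetical.

```python
import math

def selectivity_profile(rates, on_target):
    """Rank ligands by affinity for one analyte screened across an array.

    rates: {ligand_name: (ka [1/(M*s)], kd [1/s])} from per-spot fits (assumed)
    on_target: name of the intended target in `rates`
    Returns rows of (ligand, KD [M], residence time [s], fold-selectivity),
    sorted from tightest to weakest binder.
    """
    ka_on, kd_on = rates[on_target]
    KD_on = kd_on / ka_on
    rows = []
    for name, (ka, kd) in rates.items():
        KD = kd / ka
        rows.append((name, KD, 1.0 / kd, KD / KD_on))
    rows.sort(key=lambda row: row[1])
    return rows


# Hypothetical kinase panel and rate constants, for illustration only.
panel = {
    "TARGET_KINASE": (2e5, 1e-3),   # KD = 5 nM
    "OFF_TARGET_1": (1e5, 5e-2),    # KD = 500 nM
    "OFF_TARGET_2": (3e5, 3e-3),    # KD = 10 nM, close to the target
}
for name, KD, tau, fold in selectivity_profile(panel, "TARGET_KINASE"):
    print(f"{name:14s} KD={KD * 1e9:7.1f} nM  residence={tau:6.0f} s  {fold:6.1f}x")
```

Reporting residence time alongside KD matters because two off-targets with similar affinity can differ greatly in how long the drug stays bound.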

What advice would you give to labs considering integrating high-throughput SPR into their workflows?

It’s important for groups using this technology to consider the impact on their upstream and downstream processes so they can take full advantage of what HT-SPR has to offer. As with all methods, sample preparation workflows that serve you well at small scale break down when you try to scale up a fewfold, let alone 100-fold or more.

Learn from your instrument demonstration activities and take some time to redevelop your processes so that you can “feed the beast.” It’s sort of like installing an oven that can bake 100 cakes at once. It’s easy on paper to scale the recipe 100-fold and say, “OK, we need 200 cups of sugar, 12.5 gallons of milk, and 17 dozen eggs,” but you’re already in trouble if you don’t prepare the rest of your kitchen with the right amount of storage and new equipment to handle all of that. Taking time to reimagine and prepare your pipeline to make full use of HT-SPR will let you have all your cakes and eat them too.

Bio: Anthony Giannetti, PhD, associate director, applications, Carterra, got his start in biophysics and structural biology while earning his B.S. in chemistry at the University of California at Santa Barbara. He earned his PhD in biochemistry and molecular biophysics at the California Institute of Technology before joining Roche, Palo Alto, in 2005. Since then, he has worked at Genentech, Google[X], and its spin-out Verily, and contributed novel biophysical approaches in clinical pharmacology leading to the approval of Erivedge (vismodegib), the first treatment for metastatic basal-cell carcinoma. He brings to Carterra over 20 years of experience in the use of biophysical approaches to drug discovery.