The pressure on industrial research labs to work efficiently has never been greater. Meeting it means accelerating prototyping and getting more value from the pilot-scale testing that follows. Streamlining these early development phases reduces time-to-market for new products, processes, and devices, which directly affects a laboratory’s competitive standing and operational output. Integrating advanced computational methods with agile physical experimentation allows organizations to compress development timelines while maintaining scientific rigor. This guide provides actionable strategies for optimizing lab operations from concept validation through pre-commercialization scale-up.

Leveraging digital twins and simulations for rapid prototyping

Digital modeling and simulation allow initial concepts to be refined substantially before resource-intensive physical prototyping begins. By creating a digital twin (a virtual replica of a physical process, product, or system), laboratory professionals can conduct thousands of virtual experiments in a fraction of the time, and at lower cost, than bench-scale work would require. This approach quickly identifies design flaws, predicts system behavior under varying conditions, and optimizes parameter ranges.

Leading with computational analysis significantly shifts the balance of work toward the pre-physical phase. Key applications include:

- Process optimization: Simulating reaction kinetics, fluid dynamics (CFD), and heat transfer to establish optimal operating parameters for a prototyping reactor or device design.

- Material selection: Modeling the physical and chemical interactions of candidate materials under expected stress and environmental factors, reducing the need for extensive empirical screening.

- Performance validation: Virtually testing the performance envelope of a prototype against specification requirements, such as efficiency, stability, or purity thresholds, before a single component is fabricated.

This digital-first approach shortens the repeated build, test, and rebuild loop often associated with traditional prototyping. When the physical prototype is finally built, it is already substantially de-risked and closer to the required performance specifications, which accelerates the entire innovation timeline.
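A virtual experiment of the kind described above can be sketched as a simple parameter sweep. The example below assumes an isothermal, first-order batch reaction with Arrhenius kinetics. The pre-exponential factor, activation energy, conversion target, and all function names are illustrative assumptions, not values from a real process, and a production digital twin would be far more detailed.

```python
import math
from itertools import product

R_GAS = 8.314  # universal gas constant, J/(mol*K)


def rate_constant(temp_k, pre_exp=1.0e6, activation_energy=60_000.0):
    """Arrhenius rate constant in 1/s (pre_exp and activation_energy are made up)."""
    return pre_exp * math.exp(-activation_energy / (R_GAS * temp_k))


def conversion(temp_k, time_s):
    """Conversion of an isothermal first-order batch reaction: X = 1 - exp(-k*t)."""
    return 1.0 - math.exp(-rate_constant(temp_k) * time_s)


def sweep(temps_k, times_s, target=0.95):
    """Evaluate every (temperature, time) pair and keep those that meet the target."""
    feasible = []
    for temp_k, time_s in product(temps_k, times_s):
        x = conversion(temp_k, time_s)
        if x >= target:
            feasible.append((temp_k, time_s, x))
    # Sort by temperature, then time, so the mildest feasible conditions come first.
    return sorted(feasible)


if __name__ == "__main__":
    temps = [300.0 + 10.0 * i for i in range(8)]   # 300 K to 370 K
    times = [600.0 * i for i in range(1, 13)]      # 10 min to 2 h
    runs = sweep(temps, times)
    print(f"{len(runs)} of {len(temps) * len(times)} virtual runs meet the target")
    for temp_k, time_s, x in runs[:5]:
        print(f"T={temp_k:.0f} K, t={time_s:.0f} s, conversion={x:.3f}")
```

Only the conditions that survive the sweep would then be candidates for a physical build, which is where the savings in bench time come from.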

Implementing agile principles to streamline prototyping iterations

Traditional, sequential development cycles often introduce unnecessary delays when unexpected results require extensive backtracking. By adopting agile methodologies, commonly used in software development, industrial research labs can institute shorter, more focused sprints for physical prototyping iterations. This ‘fail fast’ philosophy ensures that valuable feedback is gathered continuously and integrated immediately, minimizing the investment in suboptimal designs.

An agile framework for prototyping includes specific components designed to maximize throughput:

| Component | Description | Benefit in Prototyping |
|---|---|---|
| Short Sprints (2–4 weeks) | Defined periods with specific, measurable goals for prototype modification or testing. | Maintains focus and promotes rapid decision-making. |
| Minimum Viable Product (MVP) | The simplest possible prototype built to test a core hypothesis or function. | Reduces initial build time and resource expenditure on non-critical features. |
| Integrated Feedback Loops | Daily or frequent brief meetings (stand-ups) involving design, engineering, and lab operations staff. | Ensures immediate communication of results and consensus on the next iteration step. |
| Retrospectives | Reviews after each sprint to document what worked and what should be changed in the prototyping process itself. | Drives continuous improvement in laboratory efficiency. |

Implementing these principles requires a cultural shift in lab operations: rapid iteration has to be valued over perfection in the early stages. The payoff is that the transition from prototyping to pilot-scale testing begins with a well-validated, resilient design.

Ensuring data fidelity for seamless scale-up from benchtop to pilot-scale testing

The transition from a successful benchtop prototype to pilot-scale testing is inherently complex. Scale-dependent phenomena, such as changes in mixing or heat transfer, often go unaddressed at the bench and can cause failure at the larger scale. To mitigate these risks, a rigorous focus on data fidelity and advanced data analytics is non-negotiable. Data generated during the initial, smaller-scale work must be robust, complete, and mathematically suitable for extrapolation models.

One effective strategy involves integrating Process Analytical Technology (PAT) principles, which enable real-time measurement and control of critical quality and performance attributes. Real-time data collection minimizes measurement error and provides a richer dataset for predictive modeling necessary for effective scale-up.

For instance, the scale-up factor often relies on ensuring geometric similarity or maintaining critical parameters like mixing intensity, shear rate, or surface-area-to-volume ratio. A robust data analytics pipeline transforms the empirical observations from the small-scale prototyping effort into predictable, scalable relationships. This disciplined approach, where process understanding guides scale-up, is key to quality assurance and is formally recognized in regulatory frameworks such as those of the pharmaceutical industry (ICH Q8, Pharmaceutical Development, and its Quality by Design principles).
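To make the idea of holding a critical parameter constant concrete, here is a minimal sketch for a stirred vessel. It assumes geometric similarity and fully turbulent mixing with a constant impeller power number, under which power per volume scales as N^3 * D^2 and tip speed as N * D. The function names and the example dimensions are hypothetical, and real scale-up would need to check which criterion actually governs the process.

```python
import math


def scale_impeller_speed(n_small_rpm, d_small_m, d_large_m, criterion="power_per_volume"):
    """Impeller speed at the larger scale that keeps the chosen criterion constant.

    Assumes geometric similarity and turbulent flow with a constant power number.
    """
    ratio = d_small_m / d_large_m
    if criterion == "power_per_volume":
        # P/V is proportional to N^3 * D^2, so N2 = N1 * (D1/D2)^(2/3).
        return n_small_rpm * ratio ** (2.0 / 3.0)
    if criterion == "tip_speed":
        # Tip speed is pi * N * D, so N2 = N1 * (D1/D2).
        return n_small_rpm * ratio
    raise ValueError(f"unknown criterion: {criterion!r}")


def tip_speed(n_rpm, d_m):
    """Impeller tip speed in m/s for speed in rpm and diameter in m."""
    return math.pi * d_m * n_rpm / 60.0


def area_to_volume_ratio(ratio_small, linear_scale):
    """Surface-area-to-volume ratio after scaling every length by linear_scale."""
    return ratio_small / linear_scale


if __name__ == "__main__":
    # Hypothetical bench impeller: 0.05 m at 600 rpm, scaled to 0.5 m.
    for criterion in ("power_per_volume", "tip_speed"):
        n_large = scale_impeller_speed(600.0, 0.05, 0.5, criterion)
        print(f"{criterion}: {n_large:.1f} rpm, tip speed {tip_speed(n_large, 0.5):.2f} m/s")
```

The two criteria give different pilot-scale speeds for the same bench condition, which is why the bench data must show which parameter the process is actually sensitive to.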

Lab Quality Management Certificate

The Lab Quality Management certificate is more than training—it’s a professional advantage.

Gain critical skills and IACET-approved CEUs that make a measurable difference.

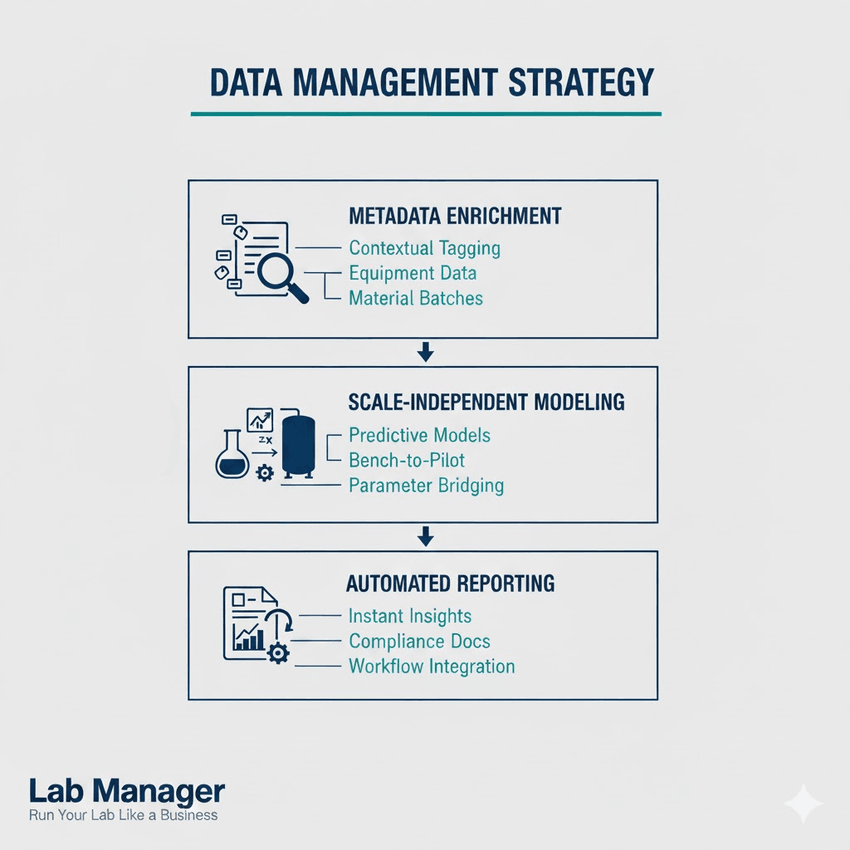

The data management strategy should support:

- Metadata enrichment: Tagging all experimental data with contextual information, including equipment calibration, material batch numbers, and exact environmental conditions.

- Scale-independent modeling: Developing predictive mathematical models that accurately bridge the gap between bench-scale parameters and pilot-scale testing requirements.

- Automated reporting: Using digital systems to automatically generate reports and variance analyses, highlighting areas where pilot-scale testing deviated from predicted outcomes.

This methodical data treatment significantly de-risks the capital investment associated with building a pilot-scale testing facility. It also accelerates the validation phase.
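The metadata and reporting points above could be prototyped along the following lines. This is only a sketch: the `RunRecord` structure, the required metadata keys, and the 5% tolerance are assumptions chosen for illustration, not a standard schema.

```python
from dataclasses import dataclass, field

# Hypothetical minimum set of context tags for every run.
REQUIRED_METADATA = ("equipment_calibration_date", "material_batch", "ambient_temp_c")


@dataclass
class RunRecord:
    run_id: str
    scale: str                      # e.g. "bench" or "pilot"
    predicted: dict                 # model predictions, keyed by attribute name
    observed: dict                  # measured values, keyed by attribute name
    metadata: dict = field(default_factory=dict)


def missing_metadata(record):
    """Return the required metadata keys that the record does not carry."""
    return [key for key in REQUIRED_METADATA if key not in record.metadata]


def variance_report(record, tolerance=0.05):
    """Compare observed values with predictions and flag relative deviations."""
    lines = []
    for name, pred in record.predicted.items():
        obs = record.observed.get(name)
        if obs is None:
            lines.append(f"{name}: no observation recorded")
        elif pred == 0:
            lines.append(f"{name}: predicted value is zero, relative deviation undefined")
        else:
            rel_dev = (obs - pred) / pred
            flag = "DEVIATION" if abs(rel_dev) > tolerance else "ok"
            lines.append(
                f"{name}: predicted={pred:g} observed={obs:g} dev={rel_dev:+.1%} [{flag}]"
            )
    return lines


if __name__ == "__main__":
    run = RunRecord(
        run_id="pilot-001",
        scale="pilot",
        predicted={"yield": 0.90, "purity": 0.99},
        observed={"yield": 0.81, "purity": 0.985},
        metadata={"equipment_calibration_date": "2025-01-15", "material_batch": "B-42"},
    )
    print("Missing metadata:", missing_metadata(run))
    print("\n".join(variance_report(run)))
```

Refusing to accept a run with missing metadata at ingestion time is one simple way to keep the dataset usable for later scale-up modeling.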

Designing modular and automated systems for efficient pilot-scale operations

Efficient pilot-scale testing requires platforms that maximize flexibility and minimize changeover time between experiments. Custom-built, single-purpose pilot plants are inherently slow and costly to modify. Conversely, employing modular and automated systems dramatically accelerates the testing process by allowing for quick reconfiguration and high-throughput experimentation.

Modular designs are often based on skid-mounted units or standardized interchangeable components. This approach allows lab operations teams to rapidly assemble new configurations or swap out components to test process variations. This avoids long periods of plant shutdown or modification associated with fixed infrastructure.

Automation is equally vital, particularly through Supervisory Control and Data Acquisition (SCADA) systems or other advanced process control solutions. Automation ensures highly reproducible operation and continuous data logging, which are mandatory for high-quality pilot-scale testing.

Advantages of automated, modular pilot-scale testing include:

- Reduced human error: Automated dosing, temperature control, and sampling reduce the variability introduced by manual intervention.

- 24/7 operation: The ability to run long-duration stability or endurance tests without continuous human supervision, increasing the data collection rate.

- Enhanced safety: Automated systems incorporate failsafe interlocks and remote shutdown capabilities, minimizing personnel exposure to the hazards inherent in large-scale reactions (OSHA Process Safety Management, 29 CFR 1910.119, applies when threshold quantities of highly hazardous chemicals are handled).

- Faster changeover: Standardized fittings and connections allow for accelerated cleaning and re-assembly between different pilot-scale testing campaigns.

These modernized lab operations maximize throughput and reduce the overall capital expense by leveraging flexible infrastructure across multiple projects.
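The failsafe interlock logic mentioned above can be illustrated with a short sketch. In practice, safety interlocks are implemented in dedicated control hardware rather than in a script, so this only shows the decision logic. The tag names, limits, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interlock:
    tag: str      # sensor tag the interlock watches
    low: float    # lowest permitted reading
    high: float   # highest permitted reading


def tripped_interlocks(readings, interlocks):
    """Return the tags whose readings are missing, invalid, or out of range."""
    tripped = []
    for lock in interlocks:
        value = readings.get(lock.tag)
        # Fail safe: a missing or NaN reading counts as a trip, not as healthy.
        if value is None or not (lock.low <= value <= lock.high):
            tripped.append(lock.tag)
    return tripped


def next_state(readings, interlocks):
    """Decide whether the unit may keep running or must shut down."""
    return "SHUTDOWN" if tripped_interlocks(readings, interlocks) else "RUN"


if __name__ == "__main__":
    locks = [
        Interlock("reactor_temp_c", 10.0, 150.0),
        Interlock("pressure_bar", 0.0, 8.0),
    ]
    sample = {"reactor_temp_c": 162.0, "pressure_bar": 3.1}
    print(next_state(sample, locks), tripped_interlocks(sample, locks))
```

The key design choice is that the absence of a valid reading leads to shutdown, so a failed sensor cannot silently keep an unattended run going.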

Optimizing industrial research lab operations through accelerated R&D

Accelerating both prototyping and pilot-scale testing is fundamentally about optimizing lab operations through strategic integration of digital, agile, and modular methods. Initial success in prototyping is now highly dependent on leveraging computational tools to simulate performance, drastically reducing the number of physical iterations required. This efficiency is then carried through the scale-up process. Robust, high-fidelity data from benchtop experiments informs the design and operation of flexible, automated pilot-scale testing platforms. The result is a substantial compression of the product development cycle, enabling faster market entry and a more responsive research organization.

Frequently asked questions

What are the primary bottlenecks in accelerating prototyping workflows?

The most common bottlenecks encountered in accelerating prototyping involve the time and resource constraints associated with physical build-test-analyze cycles. Delays often stem from dependence on external fabrication, slow manual data analysis, and the absence of high-fidelity predictive modeling that could eliminate unnecessary, costly prototyping iterations.

How does the application of digital twin technology benefit pilot-scale testing?

Digital twin technology provides substantial benefits to pilot-scale testing by allowing laboratory professionals to simulate potential process variations, troubleshoot control strategies, and train operators virtually before engaging the physical pilot plant. This reduces commissioning time and minimizes the risk of expensive failures during active pilot-scale testing campaigns.

Which regulatory guidelines are most relevant to successful scale-up from prototyping?

Successful scale-up into pilot-scale testing often aligns with principles outlined in global regulatory frameworks, particularly those focused on quality assurance. A primary example is the International Council for Harmonisation (ICH) Q8 guideline on Pharmaceutical Development, which promotes a "Quality by Design" (QbD) approach that relies heavily on predictive modeling and robust process understanding derived from early prototyping and characterization studies.

This article was created with the assistance of Generative AI and has undergone editorial review before publishing.