The future of the analytical lab is being rapidly reshaped by the confluence of advanced data management, robust automation, and computational AI. Under intense demands for speed, precision, and the ability to handle complex data, traditional manual workflows can no longer sustainably meet modern regulatory and scientific output requirements. This convergence restructures the entire scientific pipeline, from sample preparation and execution through to data interpretation and reporting. Integrating these elements successfully allows laboratory professionals to improve efficiency, draw insights from large, heterogeneous datasets that were previously out of reach, and maintain a competitive advantage. Adopting these digital standards is therefore critical for a future-ready analytical lab.

Integrating Data, Automation, and AI for the Modern Analytical Lab

The effectiveness of any modern analytical lab hinges upon the seamless interaction between its data infrastructure, automated processes, and computational intelligence. These three components operate as interdependent pillars, where weaknesses in one area compromise the efficacy of the others. Data integrity serves as the essential foundation, ensuring that every measurement, transaction, and result is attributable, legible, contemporaneous, original, and accurate (ALCOA), and additionally complete, consistent, enduring, and available (ALCOA+).

Data Infrastructure as the Foundation

Before the full benefits of automation or AI can be realized, the laboratory’s data ecosystem must be unified and standardized. This involves moving beyond localized instrument data files to a centralized, cloud-enabled structure where data is captured directly from instrumentation in a format that is immediately machine-readable and contextually rich. Such a system facilitates comprehensive metadata capture—tracking the sample’s lifecycle, instrument parameters, operator identity, and environmental conditions. This rigorous data governance is necessary not only for regulatory compliance but also for training and deploying reliable AI models. Inadequate or fragmented data streams lead to "garbage in, garbage out," rendering subsequent investments in advanced technologies ineffective.
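As a minimal sketch of what "contextually rich" capture could look like, the record below bundles a single reading with its sample, instrument, operator, and environmental context. The class name `MeasurementRecord` and all field names are illustrative assumptions, not part of any LIMS or vendor schema.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import hashlib
import json


@dataclass(frozen=True)
class MeasurementRecord:
    """One instrument reading plus the context needed to interpret it later.

    Hypothetical schema for illustration only.
    """
    sample_id: str
    instrument_id: str
    operator_id: str
    value: float
    unit: str
    instrument_params: dict = field(default_factory=dict)
    environment: dict = field(default_factory=dict)
    # Timestamp is taken at creation time (contemporaneous capture).
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # sort_keys gives a stable serialization, so the checksum is reproducible.
        return json.dumps(asdict(self), sort_keys=True)

    def checksum(self) -> str:
        # A content hash lets downstream systems detect silent modification.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


record = MeasurementRecord(
    sample_id="S-001",
    instrument_id="HPLC-01",
    operator_id="op-17",
    value=12.5,
    unit="mg/L",
    instrument_params={"flow_mL_min": 1.0},
    environment={"temp_C": 21.5},
)
print(record.to_json())
```

Freezing the dataclass and storing a checksum alongside the record is one simple way to support the "original" and "accurate" attributes; a production system would typically rely on the audit-trail features of its data platform instead.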

Interoperability Through Standardization

A significant challenge in legacy laboratories is the lack of common communication protocols between different manufacturers' instruments. Achieving true, end-to-end automation requires broad adherence to shared data formats and communication standards. Without instrument standardization, the automated transfer of samples, methods, and results between disparate systems remains cumbersome and prone to error. Successful integration promotes fully traceable and auditable data trails, which is crucial for analytical lab environments subject to Good Laboratory Practice (GLP) or Good Manufacturing Practice (GMP) regulations. A unified data fabric allows AI algorithms to access and correlate information across multiple experiments and instrument types, dramatically improving the scope of analysis.

The Role of AI in Data Optimization

Once a robust data foundation is established, AI becomes a powerful tool for enhancing operational efficiency and scientific discovery. Machine learning algorithms can be trained on high-volume datasets to perform tasks that are slow or prone to human bias, such as baseline correction, peak integration, and chromatogram review. This application of AI frees laboratory professionals to focus on complex problem-solving and experimental design, rather than repetitive data processing. Furthermore, AI can proactively monitor instrument performance, predicting maintenance needs before failures occur, thus maximizing the uptime of expensive analytical equipment within the analytical lab. The synergy of clean, standardized data and intelligent computational analysis is the defining characteristic of the future laboratory.
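To make the data processing tasks named above concrete, the sketch below performs a straight-line baseline correction and a trapezoidal peak integration on a tiny synthetic trace. This is a deterministic stand-in, not a machine learning model: it shows the kind of calculation a trained model would be asked to perform consistently. The function names and the synthetic data are assumptions for illustration.

```python
def subtract_linear_baseline(times, signal):
    """Subtract a straight line drawn between the first and last points."""
    t0, t1 = times[0], times[-1]
    y0, y1 = signal[0], signal[-1]
    slope = (y1 - y0) / (t1 - t0)
    return [y - (y0 + slope * (t - t0)) for t, y in zip(times, signal)]


def integrate_peak(times, signal):
    """Trapezoidal area under the (baseline-corrected) signal."""
    area = 0.0
    for i in range(1, len(times)):
        area += 0.5 * (signal[i] + signal[i - 1]) * (times[i] - times[i - 1])
    return area


# Synthetic trace: a triangular peak sitting on a drifting baseline (1 + 0.5 * t).
times = [0.0, 1.0, 2.0, 3.0, 4.0]
raw = [1.0, 2.5, 4.0, 3.5, 3.0]

corrected = subtract_linear_baseline(times, raw)
print(corrected)                         # [0.0, 1.0, 2.0, 1.0, 0.0]
print(integrate_peak(times, corrected))  # 4.0
```

Real chromatograms need more careful baseline models and peak boundary detection; the point here is only that the correction and integration steps are well-defined calculations whose consistency can be checked.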

Next-Generation Automation: Achieving High-Throughput and Multi-Tech Workflows

The implementation of next-generation automation is essential for achieving the efficiency and scale required in modern analytical lab settings. This involves transitioning from simple, isolated automated steps to fully integrated, lights-out systems capable of managing complex, multi-tech workflows. The goal is achieving high-throughput screening and analysis while minimizing human intervention and variability.

The Evolution of Robotic Systems

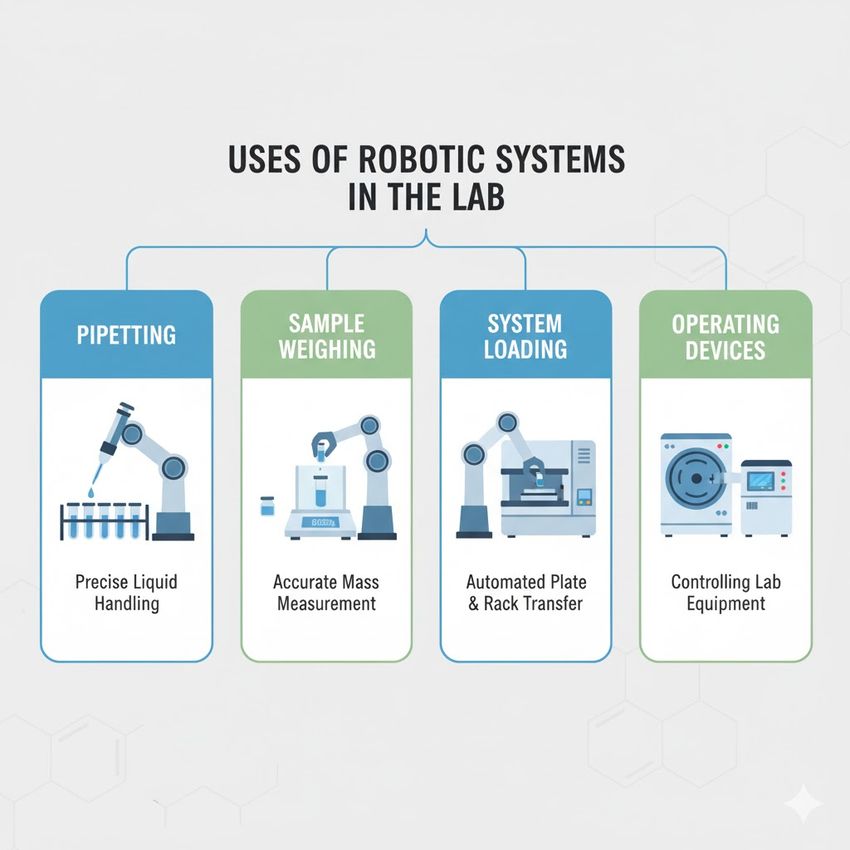

Contemporary laboratory automation extends far beyond basic liquid handling. Advanced robotic arms, sometimes referred to as 'cobots' (collaborative robots), are integrated with sophisticated vision systems and sensor technology. These systems are capable of performing highly nuanced tasks, including:

Robotic systems have evolved and show great promise for use in analytical labs.

GEMINI (2025)

- Precision micro-pipetting with dynamic volume adjustment.

- Sample weighing and solvent preparation with gravimetric (mass-based) feedback.

- System loading and unloading of consumables (plates, vials, columns).

- Operation of ancillary devices, such as centrifuges, shakers, and thermocyclers.

The shift toward modular, flexible automation allows systems to be reconfigured quickly to handle diverse applications, from drug discovery compound management to personalized medicine diagnostics. This adaptability is critical as scientific focus areas evolve rapidly.

Enabling High-Throughput and Parallel Processing

High-throughput methods are characterized by the ability to process a large number of samples, or complex analyses, in a short period. This often relies on miniaturization (e.g., 384- or 1536-well plate formats) and parallel processing. Automation ensures the repeatability and spatial accuracy necessary for these small volumes.

| Feature | Traditional Workflow | High-Throughput Automated Workflow |
|---|---|---|
| Sample Processing | Sequential, single-tube or vial | Parallel, multi-well plate format |
| Method Execution | Manual instrument setup and run initiation | Automated scheduling via Laboratory Information Management System (LIMS) |
| Throughput Rate | Low to medium (tens per day) | High to ultra-high (hundreds to thousands per day) |
| Error Rate | Susceptible to human pipetting/dilution errors | Minimized by robotic precision and integrated quality checks |

The integration of automation with LIMS and Electronic Lab Notebooks (ELN) ensures that every high-throughput sample run is documented immediately, linking the resulting data directly back to its source well and experimental context. This immediate documentation is a major advantage for auditability and compliance.
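Linking a result back to its source well depends on a stable mapping between a reader's linear index and the plate coordinate. The sketch below assumes row-major ordering (A01, A02, ... across each row) and the common 8 x 12, 16 x 24, and 32 x 48 layouts; the function names are illustrative, and a given instrument may number wells differently.

```python
# Rows x columns for common microplate formats.
PLATE_LAYOUTS = {96: (8, 12), 384: (16, 24), 1536: (32, 48)}


def row_label(row_index):
    """Rows run A..Z, then AA..AF for the 32-row 1536-well format."""
    if row_index < 26:
        return chr(ord("A") + row_index)
    return "A" + chr(ord("A") + row_index - 26)


def well_id(index, plate_size=384):
    """Convert a zero-based, row-major well index to an ID such as 'B07'."""
    rows, cols = PLATE_LAYOUTS[plate_size]
    if not 0 <= index < rows * cols:
        raise ValueError(f"index {index} is outside a {plate_size}-well plate")
    row, col = divmod(index, cols)
    return f"{row_label(row)}{col + 1:02d}"


print(well_id(0))           # A01
print(well_id(383))         # P24
print(well_id(1535, 1536))  # AF48
```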

Multi-Tech Workflows and Seamless Transition

The execution of a complex analysis often requires sequential use of multiple technologies, such as sample extraction (liquid handling) followed by chromatographic separation (HPLC) and detection (Mass Spectrometry). Multi-tech workflows involve the automated physical and digital transfer of the sample and its associated metadata between these instruments. Sophisticated scheduling software, often managed by AI components, coordinates this process, optimizing the queue times and resource utilization across the entire analytical lab. The successful orchestration of these multi-tech workflows results in a significant reduction in total analysis time and labor costs.
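The coordination problem can be illustrated with a very small first-come, first-served scheduler. It is a sketch under simplifying assumptions (one unit of each instrument, fixed step durations, no AI-based optimization), and the step names and durations are invented for the example.

```python
def schedule(samples, steps):
    """Assign start/end times for each sample on each instrument.

    samples: list of sample IDs, processed in the order given.
    steps:   ordered list of (instrument_name, duration_minutes).
    Each instrument handles one sample at a time.
    """
    instrument_free_at = {name: 0 for name, _ in steps}
    plan = []
    for sample in samples:
        sample_ready_at = 0
        for name, minutes in steps:
            # A step starts once both the sample and the instrument are free.
            start = max(sample_ready_at, instrument_free_at[name])
            end = start + minutes
            instrument_free_at[name] = end
            sample_ready_at = end
            plan.append((sample, name, start, end))
    return plan


steps = [("extraction", 10), ("hplc", 30), ("ms", 5)]
plan = schedule(["S1", "S2"], steps)
for row in plan:
    print(row)

makespan = max(end for _, _, _, end in plan)
print(makespan)  # 75 minutes, versus 90 if the two samples ran strictly one after the other
```

Even this naive pipelining shortens the total run because the second sample is extracted while the first is on the HPLC; a production scheduler would additionally weigh priorities, sample stability, and instrument availability.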

Instrument Standardization: Foundation for Seamless Automation and Multi-Tech Workflows

The fragmentation of analytical methodologies across different instrumentation platforms remains one of the largest obstacles to achieving true, enterprise-level automation in the analytical lab. Addressing this requires a concerted effort toward instrument standardization and the development of coherent multi-tech workflows. This principle applies equally to hardware, software interfaces, and data output formats.

Standardization of Hardware and Components

At the hardware level, standardization involves adopting common physical interfaces for sample containers, plate sizes, and transfer mechanisms (e.g., robotic gripping points). This ensures that a single liquid handling robot can seamlessly interact with instruments from diverse manufacturers. Furthermore, standardizing key analytical components, such as column chemistries or detector settings, across different analytical platforms allows for methods to be more easily transferred and validated. This consistency directly supports the goal of flexible, autonomous operation, particularly in environments designed for high-throughput analysis.

Standardizing Software and Data Outputs

The digital aspect of instrument standardization is even more critical. Data output from analytical instruments (e.g., raw spectral data, chromatograms) must adhere to non-proprietary, open data standards. This move away from vendor-locked file formats is essential for cross-platform data compatibility and long-term data archival. Data transformation and parsing pipelines consume significant laboratory resources when dealing with inconsistent formats; standardization eliminates this overhead.

In a unified digital environment, the implementation of multi-tech workflows becomes streamlined. Data acquired from a front-end instrument (e.g., UV-Vis spectrometer) is instantly available to feed decision-making logic for a subsequent instrument (e.g., an automated fractionation system). This seamless digital handoff, facilitated by open standards (such as SiLA for device communication and AnIML for analytical data representation), is what enables dynamic, responsive automation. For example, a quality control sample that fails an initial screening on a rapid analyzer can be automatically flagged and rerouted for detailed, confirmatory analysis on a high-resolution mass spectrometer. This is a genuine example of a flexible multi-tech workflow orchestrated by the data system.
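The rerouting decision in the quality control example can be reduced to a simple rule, sketched below. The class `ScreeningResult`, the queue names, and the percentage tolerance are hypothetical; they are not drawn from SiLA, AnIML, or any vendor system.

```python
from dataclasses import dataclass


@dataclass
class ScreeningResult:
    """Outcome of a rapid first-pass measurement (illustrative fields)."""
    sample_id: str
    measured: float
    target: float
    tolerance_pct: float


def route(result):
    """Return the name of the next queue for this sample."""
    deviation_pct = abs(result.measured - result.target) / result.target * 100
    if deviation_pct <= result.tolerance_pct:
        return "release"
    # Out-of-tolerance samples go to confirmatory high-resolution MS.
    return "confirm_hrms"


print(route(ScreeningResult("QC-01", measured=98.0, target=100.0, tolerance_pct=5.0)))  # release
print(route(ScreeningResult("QC-02", measured=90.0, target=100.0, tolerance_pct=5.0)))  # confirm_hrms
```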

Impact on Method Transfer and Scale-Up

The benefits of instrument standardization extend directly to method transfer and regulatory compliance. When instruments and data formats are consistent, moving an analytical method from a development laboratory to a quality control facility, or between global sites, is significantly simplified. This reduces the need for extensive re-validation and ensures consistency in global reporting. This level of consistency is foundational for feeding clean, reliable data into AI systems for performance monitoring and predictive modeling, reinforcing the interconnected nature of the three core pillars of the future analytical lab.

AI-Driven Method Validation and Multimodal Analysis in the Analytical Lab

The complexity of modern analytical data, often originating from diverse sources, necessitates sophisticated tools for quality assurance. The adoption of AI for method validation and multimodal analysis represents a significant leap forward in the reliability and efficiency of the analytical lab.

AI for Enhanced Method Validation

Traditional method validation is a resource-intensive process requiring extensive experimental runs to establish parameters such as accuracy, precision, linearity, and robustness. AI applications, specifically machine learning models, can be deployed to streamline several aspects of this process:

- Robustness Testing Simulation: AI can simulate the effects of minor changes in instrument parameters (temperature, flow rate, column age) on method performance, predicting areas of instability and guiding the selection of optimal operating ranges, thus reducing the number of physical experimental runs required.

- Data Quality Review: Trained AI models can rapidly and objectively review validation data for anomalies, subtle trends, or systematic errors that might be missed by human review. This ensures the data package submitted for validation is complete and consistent, significantly accelerating the regulatory approval process for new methods in the analytical lab.

- Cross-Validation Comparison: When a method is transferred between instruments or sites, AI can compare performance metrics from the original and transferred methods, identifying statistically significant differences and providing a quantitative measure of equivalence.

The objective, tireless nature of AI significantly enhances the rigor of method validation, leading to more robust and reliable analytical procedures capable of supporting high-throughput operation.
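The robustness simulation idea can be sketched as a small Monte Carlo experiment: perturb the operating parameters, predict the response, and count how often the result stays within tolerance. The response model below is a hypothetical placeholder (retention time inversely proportional to flow and falling about 2% per degree Celsius); in practice it would be replaced by a model fitted to real validation data.

```python
import math
import random


def predicted_retention(temp_c, flow_ml_min, nominal_rt=5.0,
                        ref_temp=30.0, ref_flow=1.0, temp_coeff=0.02):
    """Hypothetical response model, for illustration only."""
    return nominal_rt * (ref_flow / flow_ml_min) * math.exp(
        -temp_coeff * (temp_c - ref_temp)
    )


def robustness_pass_rate(temp_sd, flow_sd, n_trials=2000, tolerance=0.05, seed=0):
    """Fraction of simulated runs whose retention time stays within tolerance."""
    rng = random.Random(seed)  # fixed seed so the simulation is repeatable
    nominal = predicted_retention(30.0, 1.0)
    passes = 0
    for _ in range(n_trials):
        temp = rng.gauss(30.0, temp_sd)
        flow = rng.gauss(1.0, flow_sd)
        if flow <= 0:
            continue  # physically impossible draw, counted as a failure
        rt = predicted_retention(temp, flow)
        if abs(rt - nominal) / nominal <= tolerance:
            passes += 1
    return passes / n_trials


print(robustness_pass_rate(temp_sd=0.1, flow_sd=0.005))  # tightly controlled conditions
print(robustness_pass_rate(temp_sd=3.0, flow_sd=0.1))    # loosely controlled conditions
```

Comparing pass rates across candidate control limits is one way to decide which physical robustness experiments are worth running; it does not replace them.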

Multimodal Analysis and Data Fusion

Multimodal analysis refers to the acquisition and joint interpretation of data from two or more distinct analytical techniques applied to the same sample. In an analytical lab, this often involves combining techniques like spectroscopy (e.g., Raman, FTIR), chromatography (e.g., LC, GC), and mass spectrometry (e.g., MS/MS, high-resolution MS) to generate a richer, more comprehensive chemical profile of a sample.

The resulting datasets are inherently complex, featuring data matrices that span several dimensions (e.g., retention time, mass-to-charge ratio, intensity, and spectral fingerprint). At this scale, AI and multivariate statistical methods are in practice the most feasible tools for extracting meaningful patterns from the fused data.

- Pattern Recognition: AI algorithms (such as deep learning networks) can identify subtle correlations between different data modes, allowing for definitive identification of complex molecules or the classification of samples based on subtle compositional differences (e.g., distinguishing between batches of raw materials).

- Predictive Modeling: By training on combined datasets, AI can build predictive models for sample properties (e.g., purity, efficacy, toxicity) that are more accurate than models built on single-modality data alone. This is particularly valuable in early-stage research and development.
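One common starting point for combining modalities is low-level data fusion: scale each technique's feature block so that no block dominates by raw magnitude, then concatenate the features sample by sample. The sketch below uses only the standard library, and the two tiny blocks of numbers are made up for illustration.

```python
from statistics import mean, pstdev


def autoscale(block):
    """Column-wise z-score of a samples x features block."""
    scaled_columns = []
    for column in zip(*block):
        mu, sd = mean(column), pstdev(column)
        # A constant column carries no information; map it to zeros.
        scaled_columns.append([(v - mu) / sd if sd else 0.0 for v in column])
    return [list(row) for row in zip(*scaled_columns)]


def fuse(*blocks):
    """Low-level fusion: scale each block, then join features per sample."""
    n_samples = len(blocks[0])
    if any(len(block) != n_samples for block in blocks):
        raise ValueError("all blocks must describe the same samples")
    scaled = [autoscale(block) for block in blocks]
    return [sum((blk[i] for blk in scaled), []) for i in range(n_samples)]


# Two samples measured by two techniques (synthetic values).
raman = [[100.0, 200.0], [300.0, 400.0]]   # two spectral features
ms = [[0.1], [0.3]]                        # one mass-spec intensity

fused = fuse(raman, ms)
print(fused)  # one row per sample, three scaled features each
```

The fused matrix would then be passed to a multivariate or machine learning model; mid-level and high-level fusion strategies, which combine extracted features or model outputs instead, are alternatives not shown here.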

The future of the analytical lab is defined by its ability to generate vast amounts of information via high-throughput, multi-tech workflows and subsequently use AI to perform multimodal analysis that transforms complex data into actionable, high-value scientific conclusions.

Securing the Scientific Edge Through Analytical Lab Automation and AI Adoption

The future of the analytical lab is irrevocably linked to the cohesive adoption of integrated data systems, process automation, and computational AI. This transformation moves the laboratory away from being a collection of disparate instruments and toward operating as a unified, intelligent system. The benefits of this convergence—increased throughput, reduced analytical variability, enhanced data integrity, and accelerated scientific discovery—are compelling drivers for investment.

For laboratory professionals, this evolution underscores the professional necessity of cultivating a strong command of data science principles and digital methodologies. Efforts in instrument standardization and the sophisticated management of multi-tech workflows are foundational requirements. Furthermore, the application of AI in areas such as method validation and multimodal analysis is redefining quality assurance and analytical depth. The laboratories that successfully navigate this transition will establish themselves as leaders in scientific rigor, efficiency, and innovation, securing a decisive edge in the rapidly evolving landscape of analytical science.

FAQ

What role does AI play in improving the accuracy of analytical lab results?

AI systems significantly enhance the accuracy and reliability of results in the analytical lab by automating and standardizing data processing steps that are often sources of human variability. Machine learning algorithms, trained on large volumes of validated data, can perform advanced signal processing, such as baseline estimation, peak deconvolution, and noise filtering, with higher consistency than human operators. Furthermore, AI enables sophisticated cross-referencing of results across sequential steps in multi-tech workflows, identifying inconsistencies that might suggest sample degradation or instrument drift. This capability for objective, continuous quality monitoring elevates the overall integrity of the scientific output, providing greater confidence in the reported data.
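Continuous quality monitoring of this kind can start from simple control-chart rules applied to a recurring control standard. The sketch below flags a reading that falls more than three standard deviations from the historical mean, or a run of consecutive readings on one side of it; the rule parameters and the example values are assumptions, and a rule-based check like this is a baseline that learned models would extend.

```python
from statistics import mean, stdev


def drift_flags(baseline, new_values, run_length=4):
    """Return (index, reason) pairs for readings that suggest drift."""
    center, sd = mean(baseline), stdev(baseline)
    flags = []
    run, last_side = 0, 0
    for i, value in enumerate(new_values):
        side = (value > center) - (value < center)  # +1 above, -1 below, 0 on the mean
        if side != 0 and side == last_side:
            run += 1
        else:
            run = 1 if side != 0 else 0
        last_side = side
        if abs(value - center) > 3 * sd:
            flags.append((i, "outside 3 SD"))
        elif run >= run_length:
            flags.append((i, "run on one side of mean"))
    return flags


history = [10.0, 10.2, 9.8, 10.1, 9.9]      # control standard, in-control period
incoming = [10.1, 10.2, 10.1, 10.3, 11.0]   # recent readings trending upward
print(drift_flags(history, incoming))
# [(3, 'run on one side of mean'), (4, 'outside 3 SD')]
```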

How does instrument standardization facilitate advanced automation?

Instrument standardization is crucial because it provides the essential interoperability required for robust, end-to-end automation. When analytical instruments adhere to common physical specifications (e.g., robotic sample handling interfaces) and, more importantly, common digital communication protocols, the systems can communicate and exchange data seamlessly. This eliminates the need for manual data translation or custom software integration layers between proprietary systems. Without this standardization, the coordination required for high-throughput operations and complex multi-tech workflows becomes prohibitively expensive and fragile, severely limiting the scale and flexibility of automated processes within the analytical lab.

What are the primary benefits of using AI for method validation?

The application of AI to method validation can improve the efficiency and robustness of analytical procedures. Rather than relying solely on empirical experimental runs, AI allows laboratories to use computational modeling to predict method performance under various conditions, streamlining the optimization and robustness testing phases. AI can screen large validation datasets for subtle non-linearities, heteroscedasticity, or systematic bias faster and more consistently than manual review, complementing traditional statistical tools. This accelerated, more rigorous approach to method validation results in methods that are better understood, more stable, and faster to implement, which is critical for maintaining rapid turnover in a high-throughput analytical lab environment.

How do multi-tech workflows and multimodal analysis relate to high-throughput laboratories?

Multi-tech workflows and multimodal analysis are indispensable for maximizing the informational output of high-throughput laboratories. A multi-tech workflow leverages automation to sequentially move a sample through several distinct analytical techniques (e.g., LC, then MS, then NMR), all managed by a unified scheduling system. Multimodal analysis then applies AI to the complex, combined dataset generated by these instruments, fusing the different types of information (e.g., retention time, mass, and spectral features) to provide a richer, more accurate profile than any single technique could offer. This integrated approach ensures that the laboratory’s speed (high-throughput) is matched by the depth and quality of its scientific conclusions.

This article was created with the assistance of Generative AI and has undergone editorial review before publishing.